Since machine learning algorithms are hungry for data, I have contributed to building various datasets. Some are listed below; others will be added soon.

[2025] IceCloudNet: 3D reconstruction of clouds from 2D SEVIRI images

IceCloudNet: 3D Reconstruction of Cloud Ice from Meteosat SEVIRI is now published in Artificial Intelligence for the Earth Systems, a journal of the American Meteorological Society. Five years of cloud data, and the code to produce more, are now public, enabling the study of cloud formation and development at very large scale as well as the validation of high-resolution weather and climate model simulations.

In brief, IceCloudNet:

👉 reconstructs key cloud parameters in 3D, such as ice water content and ice crystal number concentration.

👉 transforms globally available 2D images from SEVIRI, the instrument on board Meteosat Second Generation (MSG), into large-scale 3D scans like those obtained by the lidar and radar on board the CALIPSO and CloudSat missions.

👉 demonstrates how generative AI can extend the life of satellites, expand the covered area, and empower scientists with new tools to study natural phenomena.

This effort was led by Kai Jeggle, with David Neubauer and Ulrike Lohmann at ETH Zurich, and Federico Serva, Mikolaj Czerkawski and myself at ESA Φ-lab.

[ Paper in AI for the Earth Systems / arXiv / 5 years of cloud data / open-source code / video interview of Kai Jeggle ]

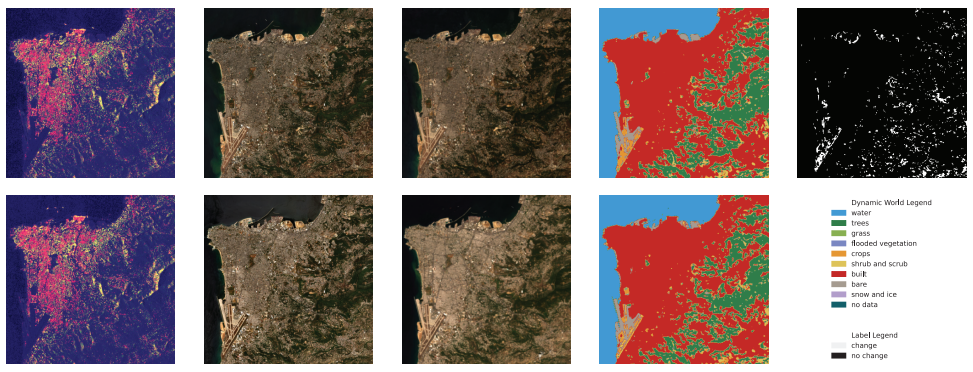

[2025] Multi-modal Multi-class Change Detection Dataset (MUMUCD)

Coming soon!

[ GRSL article / doi / MUMUCD: PRISMA + Sentinel-2 Change Detection Dataset ]

If using this dataset, please cite: MUMUCD: A Multi-modal Multi-class Change Detection Dataset, F. Serva, A. Sebastianelli, B. Le Saux, F. Ricciuti, IEEE Geoscience and Remote Sensing Letters, July 2025.

[2022] 2022 Data Fusion Contest: Semi-supervised Learning for Land Cover Classification

With Javiera Castillo-Navarro, Ronny Hänsch, Claudio Persello, Gemine Vivone, Sébastien Lefèvre and Alexandre Boulch, we held the DFC2022, a competition on semi-supervised learning in Earth observation based on MiniFrance data, as part of the IEEE GRSS Data Fusion Contests. Along with VHR EO imagery and land-cover classes, we added digital elevation models to the new MiniFrance-DFC22 data. A full description is given in the GRSM paper announcement.

[ DFC2022 @ IEEE GRSS / DFC 2016 Results / DFC2022 data on IEEE DataPort / Starter toolkit code / DFC2022 data directly in torchgeo ]

[2022] Hyperview Challenge: Estimating Soil Parameters from Hyperspectral Images

With Jakub Nalepa, Nicolas Longépé, and colleagues from the New Space company KP Labs and ESA, we organised the Hyperview challenge for geology and agriculture from space, leveraging hyperspectral imagery (check the video). Hyperview “Seeing beyond the visible” was powered by the ai4eo.eu platform and held as a Grand Challenge at ICIP 2022 (see the Hyperview description in the ICIP paper).

You are free to use and/or refer to the Hyperview dataset in your own research (non-commercial use): the dataset can be found here and the (incomplete) PapersWithCode entry is here.

If using this dataset, please cite: The Hyperview Challenge: Estimating Soil Parameters from Hyperspectral Images, Nalepa et al., IEEE ICIP, Bordeaux, France, October 2022.

@INPROCEEDINGS{9897443,
  author={Nalepa, Jakub and {Le Saux}, Bertrand and {Longépé}, Nicolas and Tulczyjew, Lukasz and Myller, Michal and Kawulok, Michal and Smykala, Krzysztof and Gumiela, Michal},
  booktitle={2022 IEEE International Conference on Image Processing (ICIP)},
  title={The Hyperview Challenge: Estimating Soil Parameters from Hyperspectral Images},
  year={2022},
  pages={4268-4272},
  doi={10.1109/ICIP46576.2022.9897443}
}

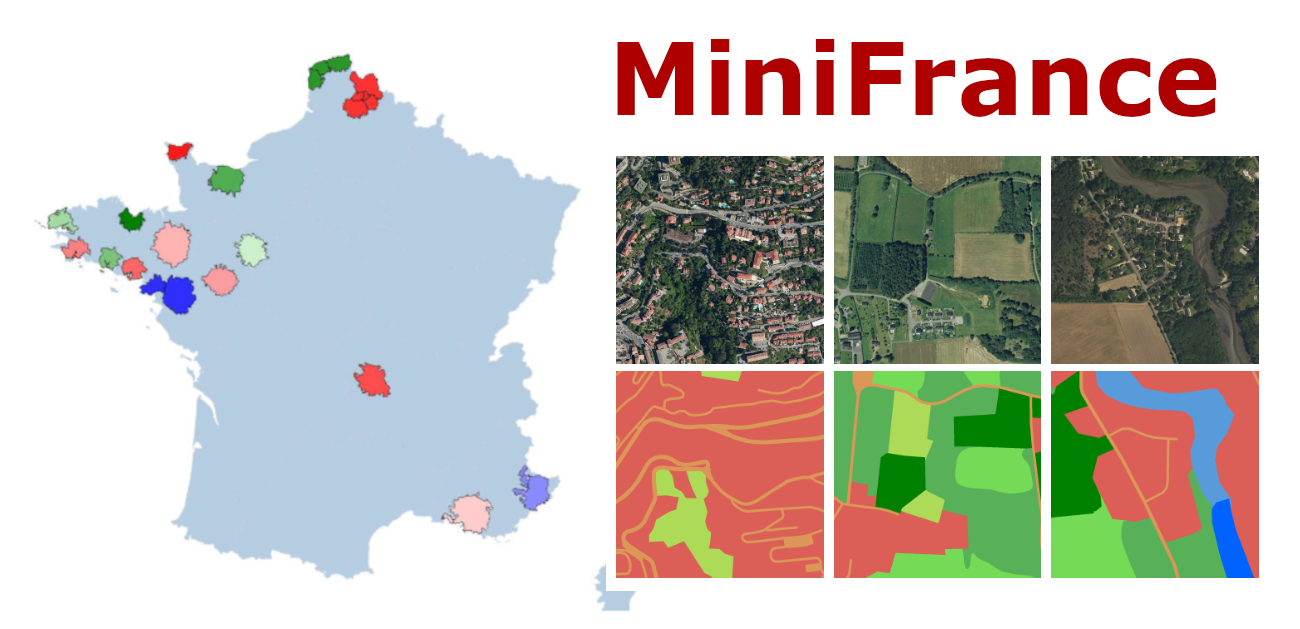

[2020] MiniFrance Dataset

In an effort led by Javiera Castillo-Navarro, with Alexandre Boulch, Nicolas Audebert, Sébastien Lefèvre and myself, we introduced the first large-scale dataset and benchmark for semi-supervised semantic segmentation in Earth Observation, the MiniFrance suite.

MiniFrance in numbers: 2000 very high resolution aerial images at 50 cm/pixel resolution, 1 square kilometer per tile, accounting for more than 200 billion samples (pixels); 16 cities and their surroundings across France, with various climates, different landscapes, and both urban and countryside scenes; 14 land-use classes, corresponding to the 2nd semantic level of UrbanAtlas. Hence the success, with nearly 200 000 downloads and a 5-star rating on IEEE DataPort!

[ open-access dataset / paper in Machine Learning journal / open-access arxiv / EO Database entry / Further used in the Data Fusion Contest 2022 of the IEEE GRSS! ]

Copyright: The images in this dataset are released under IGN’s “licence ouverte”. More information can be found at http://www.ign.fr/institut/activites/lign-lopen-data

The maps used to generate the labels in this dataset come from the Copernicus program, and as such are subject to the terms described here: https://land.copernicus.eu/local/urban-atlas/urban-atlas-2012?tab=metadata

Label maps are released under Creative-Commons BY-NC-SA.

If using this dataset, please cite: Semi-Supervised Semantic Segmentation in Earth Observation: The MiniFrance Suite, Dataset Analysis and Multi-task Network Study, J. Castillo-Navarro, B. Le Saux, A. Boulch, N. Audebert and S. Lefèvre, Machine Learning journal, 2021.

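@article{castillo2020minifrance,
  title={Semi-Supervised Semantic Segmentation in Earth Observation: The MiniFrance Suite, Dataset Analysis and Multi-task Network Study},
  author={Castillo-Navarro, Javiera and {Le Saux}, Bertrand and Boulch, Alexandre and Audebert, Nicolas and Lef{\`e}vre, S{\'e}bastien},
  journal={Machine Learning},
  volume={111},
  year={2021}
}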

[2020] SEN12-FLOOD Dataset

With Clément Rambour et al., we released SEN12-FLOOD, a SAR-multispectral dataset for the classification of flood events in image time series. The dataset is also available on the Radiant Earth platform, MLHub.earth.

[2019] High-Res. Semantic Change Dataset (HRSCD)

With Rodrigo Daudt et al., we also released HRSCD, a large-scale dataset for semantic change detection at high resolution (0.5 m/pixel). [ HRSCD website @ Rodrigo / HRSCD website @ DataPort ]

[2019] Data Fusion Contest 2019 (DFC2019)

The DFC2019, organised by the IADF TC (myself, Naoto Yokoya and Ronny Hänsch) and Johns Hopkins University (Myron Brown), was a benchmark on large-scale semantic 3D reconstruction that involved 3D reconstruction, 3D prediction, and semantic segmentation in 2D and 3D. [ DFC2019 @ IEEE GRSS / DFC2019 @ DataPort ]

[2018] Onera Satellite Change Detection (OSCD) Dataset

With Rodrigo Daudt, we released the first dataset for training deep learning models for pixelwise change detection over Sentinel-2 data. It comprises 24 registered pairs of multispectral images from 2015 and 2018, all over the world. [ OSCD paper @ IGARSS’18 / Prime OSCD website @ Rodrigo / Alternate OSCD website @ DataPort / Related: CNNs for Change Detection / Evaluation @ DASE ]
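
To illustrate what pixelwise change detection means in practice, here is a minimal sketch that scores a predicted binary change map against a change label map. It is not the evaluation protocol of the OSCD benchmark: the function name `change_scores`, the choice of precision, recall and F1, and the toy 3 x 4 maps are all assumptions made for illustration, and plain Python lists stand in for real rasters.

```python
def change_scores(pred, label):
    """Pixelwise precision, recall and F1 for binary change maps (1 = change)."""
    tp = fp = fn = 0
    for pred_row, label_row in zip(pred, label):
        for p, t in zip(pred_row, label_row):
            if p and t:
                tp += 1          # change predicted and annotated
            elif p and not t:
                fp += 1          # change predicted but not annotated
            elif t and not p:
                fn += 1          # annotated change that was missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Hypothetical toy maps: one false alarm and one missed pixel.
label = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 0, 0],
]
pred = [
    [0, 1, 1, 1],
    [0, 0, 1, 0],
    [0, 0, 0, 0],
]

precision, recall, f1 = change_scores(pred, label)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

Because changed pixels are usually a small minority, scores that focus on the change class tend to be more informative than overall accuracy.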

This dataset contains modified Copernicus data from 2015-2018. Original Copernicus Sentinel data are available from the European Space Agency (https://sentinel.esa.int). Change label maps are released under Creative-Commons BY-NC-SA.

If using this dataset, please cite: Urban Change Detection for Multispectral Earth Observation Using Convolutional Neural Networks, R. Caye Daudt, B. Le Saux, A. Boulch, and Y. Gousseau, IEEE IGARSS, Valencia, Spain, July 2018.

@inproceedings{daudt2018urban,
  author = { {Caye Daudt}, R. and {Le Saux}, B. and Boulch, A. and Gousseau, Y.},
  title = {Urban Change Detection for Multispectral Earth Observation Using Convolutional Neural Networks},
  booktitle = {IEEE Int. Geoscience and Remote Sensing Symposium (IGARSS)},
  address = {Valencia, Spain},
  month = {July},
  year = {2018},
}

[2018] Data Fusion Contest 2018 (DFC2018)

The DFC2018, organised by the IADF TC (myself, Naoto Yokoya and Ronny Hänsch) and the University of Houston (Saurabh Prasad), was a benchmark on urban land use and land cover classification (or semantic segmentation). It used multispectral LiDAR point cloud data (intensity rasters and digital surface models), hyperspectral data, and very high-resolution RGB imagery. As such, it is still a relevant benchmark for hyperspectral classification and data fusion. [ DFC2018 @ IEEE GRSS / DFC2018 @ DataPort ]

[2017] Data Fusion Contest 2017 (DFC2017)

The DFC2017 was organised by the IADF TC (Devis Tuia, Gabriele Moser, and myself) together with Benjamin Bechtel and Linda See from the WUDAPT initiative.

It focused on global land use mapping using open data. Participants were provided with remote sensing data (Landsat and Sentinel-2) and vector layers (OpenStreetMap), as well as a 17-class ground reference at 100 m x 100 m resolution over five cities worldwide (local climate zones, see Stewart and Oke, 2012): Berlin, Hong Kong, Paris, Rome and São Paulo. The task was to provide land use maps over four other cities: Amsterdam, Chicago, Madrid, and Xi’an.

[ DFC2017 @ IEEE GRSS / DFC 2017 Results / download data from IEEE DataPort / Open-access outcome paper in JSTARS / legacy website ]

If using this dataset, please cite the outcome paper: N. Yokoya, P. Ghamisi, J. Xia, S. Sukhanov, R. Heremans, I. Tankoyeu, B. Bechtel, B. Le Saux, G. Moser & D. Tuia, Open Data for Global Multimodal Land Use Classification: Outcome of the 2017 IEEE GRSS Data Fusion Contest, IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens. 11 (5), May 2018.

[2016] Data Fusion Contest 2016 (DFC2016)

The DFC2016 was organised by the IADF TC (Devis Tuia, Gabriele Moser, and myself) and Deimos Imaging. It opened on January 3, 2016, and the submission deadline was April 29, 2016. Participants submitted open-topic manuscripts using the VHR and video-from-space data released for the competition. 25 teams worldwide participated in the contest.

The dataset comprised:

- VHR images (DEIMOS-2 standard products) acquired at two different dates, before and after orthorectification: panchromatic data at 1 m resolution and multispectral data at 4 m resolution.

- a high-definition video acquired from the International Space Station (ISS) at 1 m spatial resolution.

The data cover an urban and harbor area in Vancouver, Canada, and were acquired and provided for the contest by Deimos Imaging and UrtheCast.

[ DFC2016 @ IEEE GRSS / DFC 2016 Results / Open-access outcome paper in JSTARS ]

If using this dataset, please cite the outcome paper: Mou, L.; Zhu, X.; Vakalopoulou, M.; Karantzalos, K.; Paragios, N.; Le Saux, B.; Moser, G. & Tuia, D., Multi-temporal very high resolution from space: Outcome of the 2016 IEEE GRSS Data Fusion Contest, IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens. 10 (8), August 2017.

[2015] Data Fusion Contest 2015 (DFC2015)

Coming soon!

[2013] Christchurch Aerial Semantic Dataset (CASD)

Hicham Randrianarivo and I annotated images from Land Information New Zealand (LINZ) with urban semantic classes: buildings, vehicles and vegetation. Annotations are provided at object level (shapefiles) and as semantic maps (raster masks). All data (images and annotations) are released under the CC BY 4.0 license. [ Report: Christchurch CASD / Related papers using the dataset: Deformable Part Models for remote sensing / DtMM for Vehicle Detection / Segment-before-detect paper ]
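
The two annotation forms are related: an object-level polygon can be burned into a raster mask. The sketch below shows the idea with an even-odd ray-casting test at pixel centres. It is only an illustration, not the procedure used to produce the CASD masks; the helper names, the toy `building` polygon and the 6 x 4 grid are hypothetical, and coordinates are assumed to be already expressed in pixel units. Real workflows would typically rely on a GIS library instead.

```python
def point_in_polygon(x, y, polygon):
    """Even-odd rule: cast a ray towards +x and count edge crossings."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def rasterize(polygon, width, height):
    """Return a height x width mask with 1 where the pixel centre is inside."""
    return [
        [1 if point_in_polygon(col + 0.5, row + 0.5, polygon) else 0
         for col in range(width)]
        for row in range(height)
    ]


# Hypothetical rectangular footprint, in pixel coordinates (x, y).
building = [(1, 1), (4, 1), (4, 3), (1, 3)]
mask = rasterize(building, width=6, height=4)

for row in mask:
    print(row)
```

Sampling at pixel centres keeps the rule simple; pixels only partly covered by the polygon are assigned according to where their centre falls.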

If using this dataset, please cite: Man-made structure detection with deformable part-based models, H. Randrianarivo, B. Le Saux, and M. Ferecatu, IEEE IGARSS, Melbourne, Australia, July 2013.

@inproceedings{randrianarivo-13igarss-DPM,
  author = {Randrianarivo, H. and {Le Saux}, B. and Ferecatu, M.},
  title = {Man-made structure detection with deformable part-based models},
  booktitle = {IEEE Int. Geoscience and Remote Sensing Symposium (IGARSS)},
  year = {2013},
  month = {July},
  address = {Melbourne, Australia},
}