My current projects include:

Semantic Surfaces for 3D Data

With Mathilde Letard from CNRS in Rennes and Peter Naylor from ESA, we explore new ways to learn informative representations from 3D data using implicit neural representations. The result is surfaces with semantic meaning (ground, top of canopy, etc.) that can be used for completion, super-resolution, or continuous 3D modelling. Also involved in Rennes: Dimitri Lague and Thomas Corpetti.

Foundation Models for EO: PhilEO and DOFA

Foundation models, that is, AI models pre-trained at very large scale on massive data, have the power to revolutionise the way we use machine learning: they capture extensive information from unlabelled data and can then be specialised for specific tasks with only a small amount of data (making them parsimonious in labelled data). With the PhilEO team at ESA/Φ-lab (Casper Fibaek, Nikolaos Dionelis, Luke Camilleri, Andreas Luyts), we proposed the PhilEO Bench to assess the capacity of emerging geospatial FMs on a benchmark of diverse tasks. We also propose the PhilEO Foundation Model and PhilEO precursor FMs, trained at scale on massive Sentinel-2 data; PhilEO-FM is trained with geo-awareness to make it more capable. PhilEO is presented at EGU 2024 and IGARSS 2024. I also collaborated with Zhitong Xiong and Xiaoxiang Zhu at TU Munich, who develop a sensor-agnostic FM named DOFA (Dynamic One-For-All foundation model), a Neural Plasticity-Inspired Foundation Model for Observing the Earth Crossing Modalities.

[ PhilEO Bench project / PhilEO Foundation Model / PhilEO Bench on arxiv / HuggingFace community / DOFA project / DOFA arxiv ]

IceCloudNet

Clouds with ice particles play a crucial role in the climate system. Yet, they remain a source of great uncertainty in climate models and future climate projections. With Kai Jeggle, David Neubauer, and Ulrike Lohmann from the Institute for Atmospheric and Climate Science at ETH Zurich, Federico Serva and Mikolaj Czerkawski, we proposed IceCloudNet to approximate the ice water path at the spatio-temporal coverage of the SEVIRI top-view instrument (Meteosat Second Generation, MSG). IceCloudNet is a generative convolutional neural network trained on three years of SEVIRI and DARDAR data, where 3D information comes from the CALIPSO mission, which stopped in 2023. Thus, with IceCloudNet it is now possible to generate similar data from MSG, with a more frequent revisit time!

[ arxiv / dataset on WDC Climate / NeurIPS’23 / CCAI summary and slides / EGU’24 summary ]

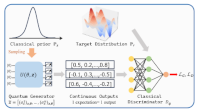

Quantum Generative AI: QGANs and Quantum Diffusion Models

Quantum neural networks have shown interesting properties for learning faster from fewer training samples, hinting at a different generalisation capacity than standard networks. We explore this potential in two works. In Su-Yeong Chang’s PhD, co-supervised with Michele Grossi and Sofia Vallecorsa at CERN, we build Quantum Generative Adversarial Networks (Quantum GANs) with continuous output generation, which allow us to generate an arbitrary number of images similar to a given dataset. With Francesca di Falco, Andrea Ceschini and Massimo Panella from La Sapienza University and Alessandro Sebastianelli from ESA/Φ-lab, we design two variants of hybrid quantum diffusion models: embedding of quantum layers in standard diffusion models, and latent quantum diffusion models, with surprisingly good performance given the size of the models.

[ Quantum GANs (to appear) / Hybrid Quantum Diffusion Models published in Künstliche Intelligenz / Latent Quantum Diffusion Models published in Quantum Machine Intelligence ]

AI-based Forecast of Solar Irradiance for Renewable Energy

With Alessandro Sebastianelli, Federico Serva and Quentin Paletta from ESA/Φ-lab on the one hand, and Andrea Ceschini and Massimo Panella from La Sapienza University (DIET - Department of Information Engineering) on the other, we developed and benchmarked methods for AI-based forecasting of solar irradiance from previous days and Meteosat weather data, published in RSE. I also collaborated with Quentin Paletta to improve spatial domain adaptation of AI solar forecasting models with physics-informed transfer learning.

[ Irradiance AI project / AI Forecast of irradiance published in Remote Sensing of Environment / Energy Conversion and Management paper on cross-site generalizability of vision-based solar forecasting models with physics-informed transfer learning ]

Physics-aware ML for Weather Forecast

With Federico Serva, we investigate forecasting of weather events. In particular, we’re part of the organising committee of the Weather4Cast competition led by Aleksandra Gruca and David Kreil at NeurIPS in 2022 and 2023, which establishes a benchmark for rain prediction from spatio-temporal time series (i.e. movies). Check the report on the 2022 edition: Weather4cast at NeurIPS 2022: Super-Resolution Rain Movie Prediction under Spatio-temporal Shifts in PMLR. In parallel, with Stephen Moran and Begum Demir from TU Berlin, we developed physics-aware deep learning approaches for super-resolved rainfall prediction.

[ Weather4Cast / Weather4cast at NeurIPS 2022 / Weather4cast Paper 2022 in PMLR / Weather4cast at NeurIPS 2023 / Rain prediction paper at BiDS’23 ]

Unsupervised AI for Forestry and Biomass

With the EO4Landscape group of Univerzita Karlova (Charles University) in Prague (Přemysl Štych, Daniel Paluba, and Jan Svoboda), we investigate AI with little or no supervision to classify forests and trees. With Daniel Paluba (and Francesco Sarti from ESA), we developed an approach to estimate common forest indices (LAI, NDVI, etc.) from SAR data (Sentinel-1) so that forests can be monitored even in the presence of clouds. With Jan Svoboda (and Peter Naylor from ESA), we worked on land cover classification refinement using Segment-Anything-based image segmentation.

[ Estimating optical vegetation indices with Sentinel-1 SAR data and AutoML / Forest dataset (to appear) / EARSEL’2024 paper on Segment-Anything for Landcover ]

Visual Question & Answering (VQA) for EO data

In Christel Chappuis’s PhD at EPFL/ECEO, co-supervised with Devis Tuia (EPFL) and Sylvain Lobry (Univ. of Paris), we investigate remote sensing visual question & answering (RSVQA). How can one interact easily with Earth observation and geospatial data archives, using natural language and no computer expertise? This is key for empowering people with EO capacities! We explored image-text embedding for RSVQA (ECML-PKDD Workshop paper) and are now moving to advanced Natural Language Processing (NLP) techniques to address time series of environmental data.

[ image-text embedding paper and video ]

Quantum Computing for Earth Observation

While at ESA/Φ-lab, I supervised the Quantum Computing for Earth Observation (QC4EO) initiative to bring the power of Quantum Computing to EO. The action was three-fold. First, we launched research projects on scientific questions to assess the potential of emerging technologies such as Quantum Machine Learning with CERN (announcement), Forschungszentrum Jülich, Jagiellonian University/Quantum Cosmos Lab, Nicolaus Copernicus Astronomical Center of the Polish Academy of Sciences, etc. Second, we launched two large-scale studies with European groups of industry and academic experts, led by Forschungszentrum Jülich and the German Aerospace Center (DLR), to identify EO use-cases for QC and define a roadmap for the next 15 years. Third, we built from scratch a QCxEO community with experts from both fields to stimulate today’s research and prepare tomorrow’s workforce. This included workshops with ELLIS (ellis page / workshop page) and with Forschungszentrum Jülich (the High Performance and Innovative Computing for EO workshop), participation in summer schools (IEEE, CINECA, EQAI) and multiple industry or academia events (TERATEC-Thales, QTML, etc.), co-organisation of the ESA Quantum Conference in 2021 and 2023, and editing of special issues in scientific journals (JSTARS special issue on quantum resources for EO).

[ All outputs of Quantum Computing for EO study / All outputs of Quantum Advantage for EO study / HPIC workshop slides / report / overall presentation at QTML’2023 ]

Older projects can be found here